Sophia Protocol

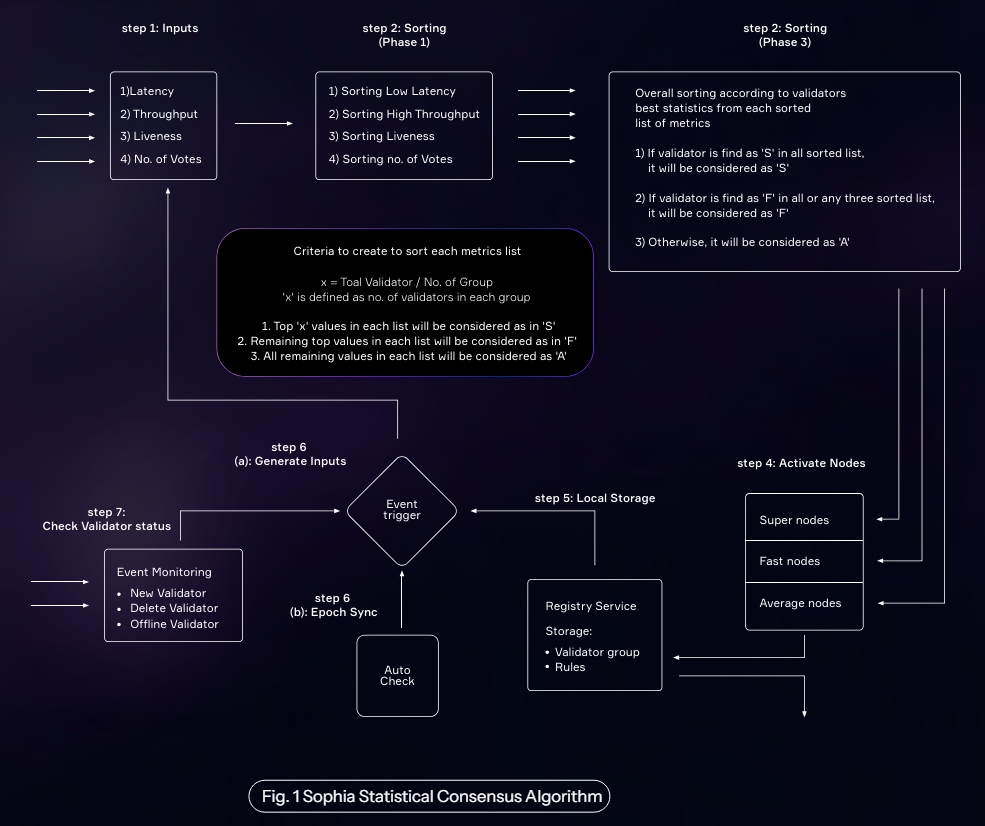

Sophia applies a set of rules to analyze and interpret the metrics data of nodes, revealing each node's functional capacity to participate in the network. Based on this analysis, three categories of validators are activated to process a broad spectrum of transactions submitted at varying fee rates. This mechanism enhances block production by expanding the chain's capabilities and sets the standard transaction fee remarkably low, based on the previously mentioned average metrics. This standard cost applies to all transactions created and submitted by end-users, so that they are processed at a speed comparable to high-fee transactions on other blockchains.

Periodically, Sophia evaluates individual statistics and generates a list categorizing validators into three groups. The Deep Learning Mechanisms oversee the PhronAi block sequence holistically, identifying any anomalies and initiating a Machine Learning auto-response mechanism to mitigate the risk posed by any potentially malicious party. Furthermore, validators within these groups are tasked with processing transactions immediately, based on fee values, benefiting end-users, node owners, and developers alike.

Validator participation within the network is carefully assessed using various metrics, which, after each mechanism cycle, serve as inputs for the next. Should a validator exhibit reduced participation, its metrics are recorded as low. With low metrics as inputs, there is a possibility of category shifts among validators, from super node to fast node or average node. Consequently, PhronAi motivates validators to engage actively in the network by processing blocks that include the maximum number of transactions. The first step involves collecting inputs from useful metrics that a node calculates independently. During the network’s initialization phase, these metrics, serving as input values, are supplied by the genesis file and a self-enforcing smart contract.

Once the statistical algorithm becomes fully operational, metrics input values will also be directly obtained from the event trigger functionality.

The low latency, high throughput, total number of votes, and maximum liveness metrics are sorted separately. For example, in the case of low latency, the individual latency metrics of all validators are used for the low-latency sorting. After this process, the sorted lists from each metric are passed to the next step. The following details the criteria used in sorting each list of metrics into the super, fast, and average categories.

A variable is declared by defining an equation:

$$x = \frac{\text{Total number of validators}}{\text{Number of groups}}$$

The number of validator groups is 3. The top x metrics in each sorted list are considered super metrics. The next x metrics in each sorted list are considered fast metrics. All remaining metrics are considered average metrics. For example, with 9 validators, x = 9 / 3 = 3.

Validators are categorized into super, fast, and average nodes based on a sorted list of all metrics. A validator is formally designated as a super node if it consistently appears as a super node in every sorted list. Similarly, a validator is classified as a fast node if it is listed as fast in at least three sorted lists. If these criteria are not met, the validator is designated as an average node. At this point, three comprehensive lists containing the IDs of all validators in the network are compiled, categorizing them as super, fast, and average nodes respectively.
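The designation rule above can be sketched in a few lines of Python. This is a minimal illustration rather than the protocol's implementation: the function names, the validator IDs, and the per-metric labels in `example` are all hypothetical, and the rule is applied literally (super only if super in every list, fast if fast in at least three lists, average otherwise).

```python
from typing import Dict, List


def classify_validator(per_metric_labels: List[str]) -> str:
    """Apply the designation rule to one validator's per-metric labels."""
    if all(label == "super" for label in per_metric_labels):
        return "super"
    if sum(label == "fast" for label in per_metric_labels) >= 3:
        return "fast"
    return "average"


def build_category_lists(labels_by_validator: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Compile the three lists of validator IDs (super, fast, average)."""
    lists: Dict[str, List[str]] = {"super": [], "fast": [], "average": []}
    for validator_id, labels in labels_by_validator.items():
        lists[classify_validator(labels)].append(validator_id)
    return lists


# Hypothetical labels, one per sorted metric list.
example = {
    "val-a": ["super", "super", "super", "super"],
    "val-b": ["fast", "fast", "super", "fast"],
    "val-c": ["super", "super", "fast", "average"],
}
category_lists = build_category_lists(example)
print(category_lists)
```

Note that under this literal reading a validator that is super in three lists and fast in one (not shown) would fall into the average category, since it meets neither criterion.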

In this phase, validator IDs within the super, fast, and average node categories retrieve their records from databases and caches, leading to the creation of fully functional validator objects ready to participate in the block-producing mechanisms. The registry service temporarily stores the active validator groups from these three categories. These groups are also permitted to engage in the event emission and evaluation process for a specific epoch round.

Concurrently, a self-enforcing event trigger service operates in parallel, generating input signals for the initial phase of the statistical algorithm. This service additionally generates input signals in reaction to particular events, such as the addition or removal of a validator. Consequently, the data-capturing fields of input objects may be initialized or aligned with input matrices. Moreover, this phase is technically regarded as the concluding step of the statistical algorithm.

This final step also operates in parallel and shares its output with the continuous execution of the process already running in the previous step. Its primary purpose is to monitor the liveness of validators within each group. Should any changes in the liveness status occur, such as the addition of new validators or the removal of existing ones, a reporting event is generated and conveyed through the event trigger service.

Sophia consists of three individual modules that operate within their own boundaries yet extend one another's functional capabilities internally. Together they directly govern the consensus committee and manage the transaction pool state, e.g. the tx queue and gas cost overhead.

A. NeuraClassi (The Arbiter)

A method for intelligently selecting an accounting node, relating to fields of blockchain, virtual currency and artificial intelligence, is provided, which includes:

Processing data

Training on processed data

Processing data:

The raw data consists of numeric values that need to be encoded as categorical values. To do so, we propose an algorithm that converts the array of values for each variable into the categories super, fast, and average, depending on the calculations below. The shape of the raw input data and the proposed Arbiter algorithm are as follows.

| Node | Power Ratio | Average Latency (ms) | Successful Throughput (kbps) | Liveliness (s) |
| --- | --- | --- | --- | --- |
| Node - 1 | 0.0714 | 19 | 1.9 | 3000 |
| Node - 2 | 0.0714 | 18 | 1.8 | 2500 |
| Node - 3 | 0.1071 | 22 | 1.6 | 4000 |
| Node - 4 | 0.1071 | 17 | 1.3 | 500 |
| Node - 5 | 0.0714 | 15 | 1.5 | 2000 |
| Node - 6 | 0.1071 | 17 | 1.3 | 200 |
| Node - 7 | 0.1428 | 14 | 1.0 | 1500 |
| Node - 8 | 0.1428 | 12 | 1.7 | 800 |
| Node - 9 | 0.1785 | 11 | 2.1 | 5000 |

Arbiter Algorithm:

Get data of different nodes having Power Ratio, Average Latency, Successful Throughput, Liveliness.

Sort the values of each data column in the form of arrays.

Calculate the X factor using the formula:

$$X_{factor} = \frac{\text{Total number of nodes}}{\text{Group of nodes}}$$

Where the total number of nodes is the number of nodes running inside the network, and the group of nodes is the number of node types to classify. In our case we are categorizing the nodes into super, fast, and average, so the group of nodes is equal to 3.

Divide each sorted list into sublists of X_factor values each, in such a way that the first sublist is assigned as Super, the second sublist as Fast, and the last sublist as Average.

If equal values fall across the boundary between sublists, these values are associated with the earlier sublist.

Encode the values by assigning the super, fast, and average categories to sublist 1, sublist 2, and sublist 3 respectively.

As AI models work well on numerical categories, we then assign 0 to average, 1 to fast, and 2 to super values in the final list.

The output of the data after this algorithm is as follows.

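| Node | Power Ratio type | Average Latency type | Successful Throughput type | Liveliness type |
| --- | --- | --- | --- | --- |
| Node - 1 | 0 | 0 | 2 | 2 |
| Node - 2 | 0 | 0 | 2 | 1 |
| Node - 3 | 1 | 0 | 1 | 2 |
| Node - 4 | 1 | 1 | 0 | 0 |
| Node - 5 | 0 | 1 | 1 | 1 |
| Node - 6 | 1 | 1 | 0 | 0 |
| Node - 7 | 2 | 2 | 0 | 1 |
| Node - 8 | 2 | 2 | 1 | 0 |
| Node - 9 | 2 | 2 | 2 | 2 |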
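The encoding steps of the Arbiter algorithm can be sketched as follows. This is an illustrative reading, not the reference implementation: the function and variable names are hypothetical, the sort direction per metric (lower latency is better, higher is better for the other three) is inferred from the sample output, and ties are resolved by inheriting the category of the preceding equal value.

```python
# Raw sample: (power ratio, latency ms, throughput kbps, liveliness s)
NODES = {
    "Node - 1": (0.0714, 19, 1.9, 3000),
    "Node - 2": (0.0714, 18, 1.8, 2500),
    "Node - 3": (0.1071, 22, 1.6, 4000),
    "Node - 4": (0.1071, 17, 1.3, 500),
    "Node - 5": (0.0714, 15, 1.5, 2000),
    "Node - 6": (0.1071, 17, 1.3, 200),
    "Node - 7": (0.1428, 14, 1.0, 1500),
    "Node - 8": (0.1428, 12, 1.7, 800),
    "Node - 9": (0.1785, 11, 2.1, 5000),
}
# Assumed direction per column: True means a higher value ranks better.
HIGHER_IS_BETTER = (True, False, True, True)
GROUPS = 3  # super, fast, average


def encode_column(values, higher_is_better, groups=GROUPS):
    """Return 2 (super), 1 (fast) or 0 (average) for each value."""
    x_factor = max(1, len(values) // groups)
    order = sorted(range(len(values)), key=lambda i: values[i],
                   reverse=higher_is_better)
    codes = [0] * len(values)
    prev_value, prev_code = None, None
    for rank, idx in enumerate(order):
        # Ranks beyond the last full sublist stay in the average category.
        code = max(groups - 1 - rank // x_factor, 0)
        if values[idx] == prev_value:
            code = prev_code  # overlapping value joins the earlier sublist
        codes[idx] = code
        prev_value, prev_code = values[idx], code
    return codes


def encode_all(nodes):
    """Encode every metric column and regroup the codes per node."""
    names = list(nodes)
    columns = list(zip(*nodes.values()))
    encoded_columns = [encode_column(list(col), hib)
                       for col, hib in zip(columns, HIGHER_IS_BETTER)]
    return {name: [col[i] for col in encoded_columns]
            for i, name in enumerate(names)}


encoded = encode_all(NODES)
for name, codes in encoded.items():
    print(name, codes)
```

Run on the raw sample, this sketch yields the same encoded rows as the output table, which suggests the inferred sort directions match the intended ones.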

AI Arbiter Model

The AI Arbiter Model is a deep learning approach designed specifically for node type detection within blockchain networks. This section outlines the architecture, mathematical formulation, training procedure, and evaluation metrics associated with the AI Arbiter Model.

The AI Arbiter protocol aims to classify nodes within a blockchain network into different types based on their behavior, role, and network attributes. By accurately identifying node types such as super nodes, fast nodes, and average nodes, the model assists network management: governance decisions are taken based on the AI module's output, which helps to overcome the problems described above.

Model Architecture:

We propose a deep neural network architecture tailored for node type detection in blockchain networks. The model comprises multiple layers, including input, hidden, and output layers. By utilizing dense layers and appropriate activation functions, our model aims to capture intricate patterns and relationships within the input data.

Different types of layers include:

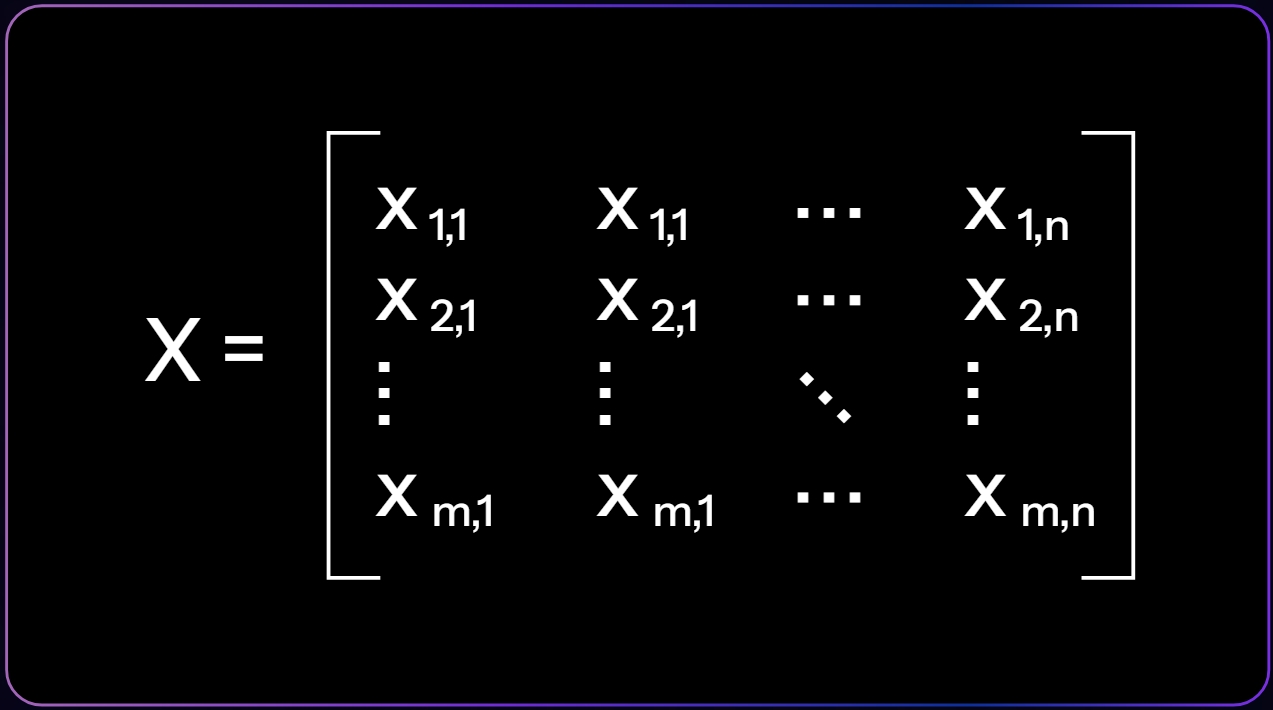

Input Layer:

The input data matrix X consists of features representing each node in the blockchain network. These features could include encoded parameters such as Power Ratio type, Average Latency type, Successful Throughput type, and Liveliness type.

$$X \in \mathbb{R}^{m \times n}$$

Here, m represents the number of nodes, and n represents the number of features associated with each node.

Hidden Layers:

The hidden layers introduce non-linearity into the model, enabling it to capture complex relationships within the input data. Each hidden layer l is computed as:

$$H^{(l)} = f\left(H^{(l-1)} W^{(l)} + b^{(l)}\right), \qquad H^{(0)} = X$$

Where $W^{(l)}$ denotes the weight matrix, $b^{(l)}$ represents the bias vector, and f is the activation function applied element-wise.

Output Layer:

The output layer produces predictions for the node types. Since this is a multi-class classification problem, we use a softmax activation function to obtain the probability distribution over the classes. The output Y is computed as:

$$Y = \mathrm{softmax}\left(H^{(L)} W^{(out)} + b^{(out)}\right)$$

Where $W^{(out)}$ and $b^{(out)}$ are the weight matrix and bias vector for the output layer, respectively, $H^{(L)}$ is the output of the last hidden layer, and L denotes the index of the last hidden layer.
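To make the layer computations concrete, here is a small pure-Python forward pass for a single node's feature vector. It is only a sketch under assumptions: ReLU is chosen as the activation f (the text does not name one), there is a single hidden layer of three units, and the weights are arbitrary untrained placeholders rather than values from the protocol.

```python
import math


def relu(vector):
    """Element-wise ReLU, used here as an assumed activation f."""
    return [max(0.0, v) for v in vector]


def softmax(vector):
    """Numerically stable softmax over a list of logits."""
    peak = max(vector)
    exps = [math.exp(v - peak) for v in vector]
    total = sum(exps)
    return [e / total for e in exps]


def dense(h, weights, bias):
    """Compute h @ W + b for one sample; W has shape (n_in, n_out)."""
    return [sum(h[i] * weights[i][j] for i in range(len(h))) + bias[j]
            for j in range(len(bias))]


def forward(x, hidden_layers, output_layer):
    """Run the input through every hidden layer, then the softmax output."""
    h = x
    for weights, bias in hidden_layers:
        h = relu(dense(h, weights, bias))
    out_weights, out_bias = output_layer
    return softmax(dense(h, out_weights, out_bias))


# Placeholder (untrained) parameters: 4 features -> 3 hidden units -> 3 classes.
hidden = [([[0.1, -0.2, 0.3],
            [0.0, 0.1, -0.1],
            [0.2, 0.2, 0.0],
            [-0.1, 0.3, 0.1]], [0.0, 0.0, 0.0])]
output = ([[0.5, -0.5, 0.0],
           [0.0, 0.5, -0.5],
           [-0.5, 0.0, 0.5]], [0.0, 0.0, 0.0])

# Encoded features of Node - 9 from the sample data.
probs = forward([2, 2, 2, 2], hidden, output)
print(probs)  # probabilities for (average, fast, super)
```

The three outputs form a probability distribution over the classes; with trained weights, the largest entry would be taken as the predicted node type.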

Training Procedure:

The model is trained using a suitable optimization algorithm such as stochastic gradient descent (SGD), Adam, or RMSprop. The objective is to minimize a suitable loss function such as categorical cross-entropy, which measures the dissimilarity between the predicted probabilities and the true labels. The annotated data sample shown above is used during the training process.
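As a rough illustration of the training objective, the sketch below minimizes categorical cross-entropy with plain per-sample SGD. To stay short it trains only a single softmax layer rather than the full deep network, and the learning rate, epoch count, and function names are assumptions. The four training rows are encoded rows from the sample data, with labels obtained by applying the super/fast/average designation rule described earlier.

```python
import math


def softmax(logits):
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(x, weights, bias):
    """Class probabilities from one softmax layer (weights: classes x features)."""
    logits = [sum(w_i * x_i for w_i, x_i in zip(row, x)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)


def mean_loss(data, weights, bias):
    """Mean categorical cross-entropy over the dataset."""
    return sum(-math.log(predict(x, weights, bias)[y]) for x, y in data) / len(data)


def sgd_epoch(data, weights, bias, lr):
    """One pass of per-sample SGD; the gradient w.r.t. the logits is p - y."""
    for x, y in data:
        probs = predict(x, weights, bias)
        for k in range(len(bias)):
            grad = probs[k] - (1.0 if k == y else 0.0)
            bias[k] -= lr * grad
            for i in range(len(x)):
                weights[k][i] -= lr * grad * x[i]


# Encoded features -> class (0 average, 1 fast, 2 super).
data = [([2, 2, 2, 2], 2),   # Node - 9
        ([0, 1, 1, 1], 1),   # Node - 5
        ([0, 0, 2, 1], 0),   # Node - 2
        ([1, 1, 0, 0], 0)]   # Node - 4

weights = [[0.0] * 4 for _ in range(3)]
bias = [0.0] * 3
initial_loss = mean_loss(data, weights, bias)
for _ in range(200):
    sgd_epoch(data, weights, bias, lr=0.1)
final_loss = mean_loss(data, weights, bias)
print(initial_loss, final_loss)
```

Adam or RMSprop would replace the update inside `sgd_epoch`; the loss function itself stays the same.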

Evaluation Metrics:

To assess the performance of the model, evaluation metrics such as accuracy, precision, recall, and F1-score can be computed on a held-out validation set or through cross-validation.
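The metrics named above can be computed directly from true and predicted labels. The following sketch uses hypothetical label lists (not results from the model) and reports precision, recall, and F1 per class.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def precision_recall_f1(y_true, y_pred, cls):
    """Per-class precision, recall and F1 (0.0 when a denominator is zero)."""
    tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
    fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
    fn = sum(t == cls and p != cls for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Hypothetical held-out labels: 0 average, 1 fast, 2 super.
y_true = [2, 1, 0, 0, 2, 1]
y_pred = [2, 1, 0, 1, 2, 0]

print("accuracy:", accuracy(y_true, y_pred))
for cls, name in enumerate(["average", "fast", "super"]):
    print(name, precision_recall_f1(y_true, y_pred, cls))
```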

B. NeutraGuard

NeutraGuard acts as a two-way watchguard between NeuraClassi and SophiaExec. It provides guardrails that control any emergent, hallucinatory, or suspicious behavior produced by those modules, takes predetermined measures, and falls back to the LockDown state to mitigate the situation as follows:

Blocking AI influence on consensus or the tx pool conditionally.

Switching to the default state to keep the network running smoothly.

The LockDown state can be triggered by any anomaly detection.

NeutraGuard controls how long the LockDown lasts; alternatively, the LockDown can be lifted only through a manual governance agreement.

NeutraGuard is also in charge of checking the AI's compliance with the on-chain state; in case of any disagreement between the states from both modules, the protocol again falls back to the LockDown state.

NeutraGuard also serves as the telemetry data channel across the Sophia Protocol.
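One way to picture the LockDown behavior is as a small state machine. The sketch below is purely illustrative: the class, its method names, and the use of block numbers to measure the LockDown duration are assumptions, not part of the protocol specification.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NeutraGuardSketch:
    """Hypothetical guard that gates AI influence on consensus and the tx pool."""
    lockdown_blocks: Optional[int]      # None: only governance can lift LockDown
    locked: bool = False
    locked_until: Optional[int] = None

    def report(self, block: int, anomaly: bool, ai_state, chain_state) -> None:
        """Enter LockDown on an anomaly or on AI/on-chain state disagreement."""
        if anomaly or ai_state != chain_state:
            self.locked = True
            self.locked_until = (None if self.lockdown_blocks is None
                                 else block + self.lockdown_blocks)

    def governance_restore(self) -> None:
        """Manual governance agreement lifts the LockDown."""
        self.locked = False
        self.locked_until = None

    def ai_allowed(self, block: int) -> bool:
        """True if AI output may be used; otherwise defaults should apply."""
        if self.locked and self.locked_until is not None and block >= self.locked_until:
            self.locked = False
            self.locked_until = None
        return not self.locked


timed = NeutraGuardSketch(lockdown_blocks=10)
timed.report(block=5, anomaly=True, ai_state="a", chain_state="a")
print(timed.ai_allowed(6), timed.ai_allowed(15))

manual = NeutraGuardSketch(lockdown_blocks=None)
manual.report(block=1, anomaly=False, ai_state="x", chain_state="y")
print(manual.ai_allowed(1000))
```

While `ai_allowed` returns false, the caller would ignore NeuraClassi output and use the default committee and pool settings.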

NeutraGuard Anomaly analysis:

Isolation Forests (IF), similar to Random Forests, are built on decision trees. Since there are no predefined labels here, it is an unsupervised model. Isolation Forest is a technique for identifying outliers in data. The approach employs binary trees to detect anomalies, resulting in linear time complexity and low memory usage. Isolation Forests are built on the fact that anomalies are the data points that are "few and different". In an Isolation Forest, randomly sub-sampled data is processed in a tree structure based on randomly selected features. The samples that travel deeper into the tree are less likely to be anomalies, as they require more cuts to isolate them. Similarly, the samples that end up in shorter branches indicate anomalies, as it was easier for the tree to separate them from other observations.

Isolation Forests for outlier detection are an ensemble of binary decision trees, each referred to as an Isolation Tree (iTree). The algorithm begins by training the data through the generation of Isolation Trees.

The flow is as follows:

Given a dataset D, a random subsample Ds is chosen and assigned to a binary tree.

Tree branching initiates by randomly selecting a feature from the set of all N features. Subsequently, branching occurs based on a random threshold within the range of minimum and maximum values of the selected feature.

Let xi denote the feature vector of data point i, and feat be the randomly selected feature.

Let thresh be a random threshold.

Branching condition: If xi[feat]<thresh, then go to the left branch; otherwise, go to the right branch.

If the value of a data point is less than the selected threshold, it follows the left branch; otherwise, it proceeds to the right branch. This process splits a node into left and right branches.

Let left(n) and right(n) denote the left and right branches of node n, respectively.

The process continues recursively until each data point is entirely isolated or until the maximum depth (if defined) is reached.

Let max_depth be the maximum depth of the tree.

Recursive termination condition: If max_depth is reached or only one data point remains in the node, stop recursion.

The above steps iteratively construct multiple random binary trees.

Let T be the set of all constructed trees.

Once the ensemble of iTrees (Isolation Forest) is formed, model training concludes. During scoring, each data point traverses all previously trained trees. Subsequently, an 'anomaly score' is assigned to each data point based on the depth of the tree required to reach that point. This score aggregates the depths obtained from each of the iTrees. Each point is then labeled -1 (anomaly) or 1 (normal) by thresholding the score according to the provided contamination parameter, which signifies the expected proportion of anomalies present in the data.
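The tree construction and depth-based scoring described above can be sketched in pure Python. This is a simplified illustration with hypothetical names and toy data: it compares raw mean path lengths and omits the score normalization and contamination thresholding that a full implementation would apply.

```python
import random


def build_itree(points, depth, max_depth, rng):
    """Recursively build one isolation tree as nested dicts."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    # Only features whose values differ can split the node.
    splittable = [f for f in range(len(points[0]))
                  if min(p[f] for p in points) < max(p[f] for p in points)]
    if not splittable:
        return {"size": len(points)}
    feat = rng.choice(splittable)
    lo = min(p[feat] for p in points)
    hi = max(p[feat] for p in points)
    thresh = rng.uniform(lo, hi)
    left = [p for p in points if p[feat] < thresh]
    right = [p for p in points if p[feat] >= thresh]
    return {"feat": feat, "thresh": thresh,
            "left": build_itree(left, depth + 1, max_depth, rng),
            "right": build_itree(right, depth + 1, max_depth, rng)}


def path_length(tree, x):
    """Number of splits x passes before reaching a leaf."""
    depth = 0
    while "feat" in tree:
        branch = "left" if x[tree["feat"]] < tree["thresh"] else "right"
        tree = tree[branch]
        depth += 1
    return depth


def build_forest(data, n_trees, sample_size, max_depth, seed=0):
    """Train n_trees isolation trees, each on a random subsample."""
    rng = random.Random(seed)
    return [build_itree(rng.sample(data, sample_size), 0, max_depth, rng)
            for _ in range(n_trees)]


def mean_depth(forest, x):
    """Average path length of x across all trees (shorter = more anomalous)."""
    return sum(path_length(tree, x) for tree in forest) / len(forest)


# Toy data: a tight 5x5 grid of normal points plus one far-away point.
inliers = [(i * 0.1, j * 0.1) for i in range(5) for j in range(5)]
outlier = (5.0, 5.0)
forest = build_forest(inliers + [outlier], n_trees=100, sample_size=16, max_depth=8)

print("outlier mean depth:", mean_depth(forest, outlier))
print("inlier mean depth:", mean_depth(forest, (0.2, 0.2)))
```

The far-away point is isolated after fewer splits on average than a point inside the grid, which is the signal the anomaly score is built on.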

C. SophiaExec

SophiaExec is the core module, responsible for executing the business logic of the protocol as follows:

1. Block Authoring / Finalizing Committee

SophiaExec receives the categorized node list and lets each node author blocks according to its position in the list. The total block capacity within the given timeframe is divided into percentages, and nodes author blocks according to their performance as measured by the NeuraClassi module. For finality, all nodes except banned ones can take part, without any distinction for the time being.

Validators categorized for NeutraGuard:

validator: Node that can become a member of the committee (or already is) via rotation.

validators reserved: immutable validators, i.e. they cannot be removed from the list.

validators non_reserved: validators that can be banned from the list.

There are two options for choosing validators during election process:

Permissionless: choose all validators that are not banned.

Permissioned::reserved: choose only reserved validators.

Permissioned::non_reserved: choose only non_reserved validators that are not banned.

These conditions provide help in LockDown situations as fallback for different sets of validators.
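The election options can be expressed as a simple selection function. The sketch below is illustrative only: the enum, the function name, and the validator IDs are hypothetical, and reserved validators are assumed never to appear in the banned set since they cannot be removed.

```python
from enum import Enum
from typing import List, Set


class ElectionMode(Enum):
    PERMISSIONLESS = "Permissionless"
    PERMISSIONED_RESERVED = "Permissioned::reserved"
    PERMISSIONED_NON_RESERVED = "Permissioned::non_reserved"


def elect(mode: ElectionMode, reserved: List[str],
          non_reserved: List[str], banned: Set[str]) -> List[str]:
    """Return the validators eligible under the given election mode."""
    if mode is ElectionMode.PERMISSIONLESS:
        return [v for v in reserved + non_reserved if v not in banned]
    if mode is ElectionMode.PERMISSIONED_RESERVED:
        return list(reserved)
    return [v for v in non_reserved if v not in banned]


reserved = ["r1", "r2"]
non_reserved = ["n1", "n2", "n3"]
banned = {"n2"}

for mode in ElectionMode:
    print(mode.value, elect(mode, reserved, non_reserved, banned))
```

In a LockDown, falling back to `PERMISSIONED_RESERVED` would restrict the committee to the immutable validator set.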

2. Validator Bans

To manage underperforming or misbehaving nodes, ban logic is required. It is currently managed by root (pallet elections will be replaced once NeuraClassi matures enough to be involved in committee decisions), but the AI module is also responsible for banning misbehaving nodes or choosing the committee set according to the given stats.

3. TxPool Rearrangements

Security concern:

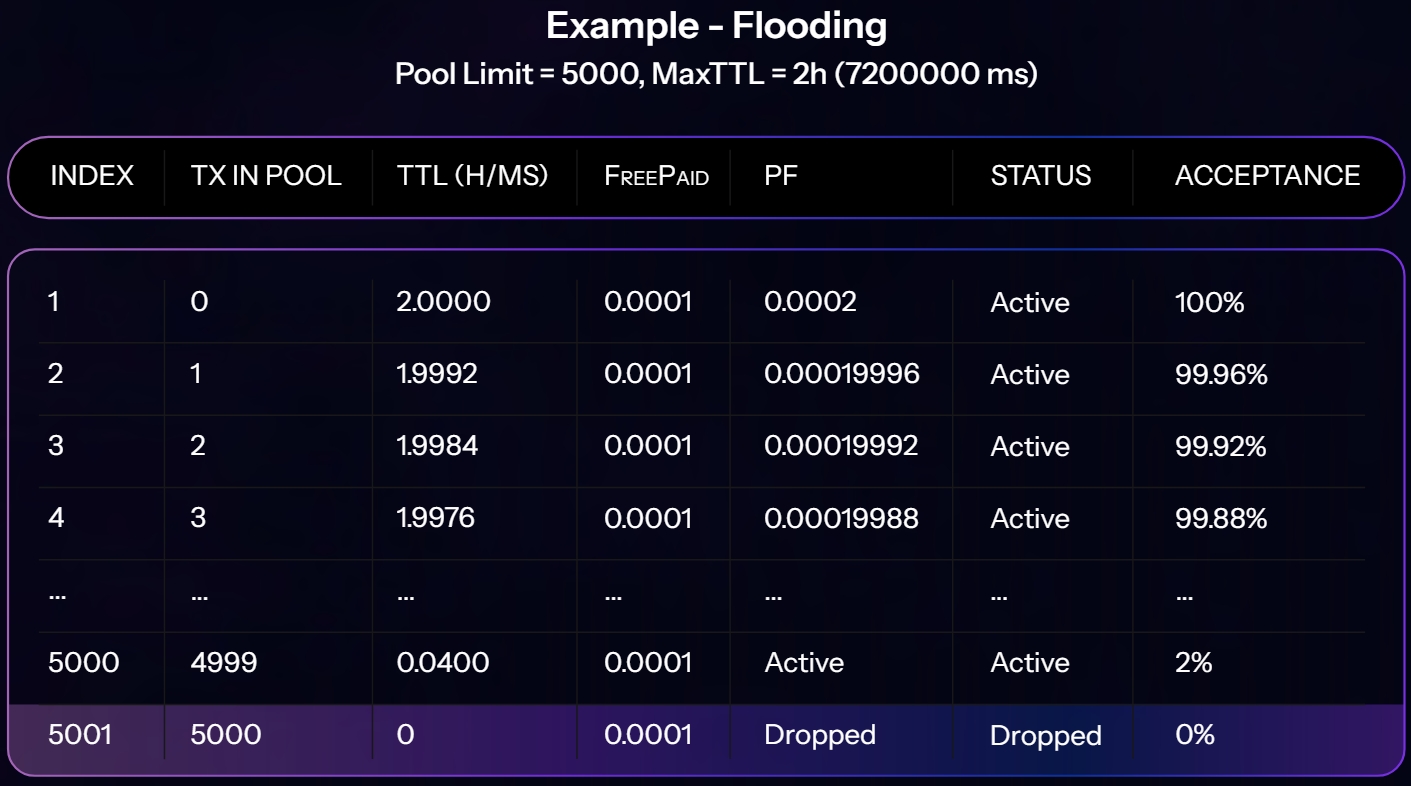

Networks that process low-fee transactions are always vulnerable to pool flooding, also known as DDoS attacks. The transaction pool and queue are carefully designed to deal with these scenarios.

Computation:

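$$\text{TTL} = \frac{\text{Tx in Pool (\%)}}{\text{PoolLimit (\%)}} \times \text{MaxTTL}$$

$$\text{Priority Factor} = \frac{\text{Pool Capacity} - \text{Total Tx in Pool}}{\text{Pool Capacity}} \times \text{MaxTTL} \times \text{Fee}$$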

If the Priority Factor (PF) of a transaction is equal to or lower than that of the last transaction in the pool, it is not accepted; if it is higher, the last transaction is dropped.

If the TTL of a transaction expires, the transaction is dropped automatically.

All other transactions will be put into the ready and future queue according to the status tags.

Priority Factor will let some extremely low fee transactions pass through, as lucky transactions.
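The two pool formulas and the acceptance rule can be worked through numerically. The sketch below is a hedged reading: the function names and the sample numbers are hypothetical, the TTL inputs are treated as a plain fill ratio, and the Priority Factor is computed on the assumption that the subtraction is grouped as (Pool Capacity - Total Tx in Pool) / Pool Capacity.

```python
def transaction_ttl(tx_in_pool: float, pool_limit: float, max_ttl: float) -> float:
    """TTL = Tx in Pool / PoolLimit * MaxTTL."""
    return tx_in_pool / pool_limit * max_ttl


def priority_factor(pool_capacity: float, total_tx_in_pool: float,
                    max_ttl: float, fee: float) -> float:
    """PF = (Pool Capacity - Total Tx in Pool) / Pool Capacity * MaxTTL * Fee."""
    free_share = (pool_capacity - total_tx_in_pool) / pool_capacity
    return free_share * max_ttl * fee


def admission_decision(new_pf: float, last_pf: float) -> str:
    """Compare an incoming transaction with the last transaction in the pool."""
    if new_pf <= last_pf:
        return "reject"
    return "accept_and_drop_last"


def is_expired(age: float, ttl: float) -> bool:
    """A transaction whose age exceeds its TTL is dropped."""
    return age > ttl


# Hypothetical numbers: a quarter-full pool of capacity 1000, MaxTTL of 64.
ttl = transaction_ttl(tx_in_pool=250, pool_limit=1000, max_ttl=64)
pf = priority_factor(pool_capacity=1000, total_tx_in_pool=250, max_ttl=64, fee=0.5)
print(ttl, pf)  # 16.0 24.0
print(admission_decision(new_pf=pf, last_pf=20.0))
print(admission_decision(new_pf=pf, last_pf=24.0))
```

Because the free share of the pool multiplies the fee, the same fee yields a higher Priority Factor when the pool is emptier, which is consistent with very low fee transactions occasionally passing through.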

Note: The hardware metrics from the node are not supposed to be stored on chain, but the resulting decision from the AI module is. That decision is itself considered a metric for further decision making.